Here at Navixy, our customers often ask us how service delivery is organized on the server side: what to expect if one of the system’s components fails, whether there is any protection against downtime or data loss, how we cope with problems at intermediate network nodes, and so on.

In this post, we take you under the hood of the Navixy architecture. Without delving into deep technical details, we will describe some of the system’s operational features to show how it actually works.

Fault tolerance and reliability

Since the Navixy platform serves customers around the world 24/7, seamless operation and reliability must be at the forefront. It is therefore natural that potential customers, when deciding whether to connect to the system, ask questions such as “How fault-tolerant and reliable is the system?” and “What backup methods are used?”.

To reduce the risk of downtime, the system is segmented into several regional nodes that are interconnected but do not depend on each other. Each regional node is built from a common fault-tolerant template, while the number of servers performing each function is chosen based on that node’s workload. Under our SLA (Service Level Agreement), we are committed to a target uptime of no less than 99.99%, i.e. no more than 52.56 minutes of downtime per year. As of 2020, our uptime has not dropped below 99.999%.
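
To make the SLA figures concrete, here is a small Python sketch of the arithmetic. A 365-day year has 525,600 minutes, so every 0.01% of allowed unavailability corresponds to 52.56 minutes. The helper name is our own, chosen for illustration.

```python
# Downtime budget implied by an uptime target (plain SLA arithmetic).
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years ignored


def allowed_downtime_minutes(uptime_percent: float) -> float:
    """Return the maximum yearly downtime, in minutes, for a given uptime percentage."""
    return MINUTES_PER_YEAR * (100.0 - uptime_percent) / 100.0


for target in (99.99, 99.999):
    print(f"{target}% uptime -> {allowed_downtime_minutes(target):.2f} min/year")
# 99.99% uptime -> 52.56 min/year
# 99.999% uptime -> 5.26 min/year
```

By the same arithmetic, the 99.999% level reached in 2020 corresponds to roughly five minutes of downtime per year.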

We decided to segment the system into regional nodes back at the architecture design stage. This seemed the easiest way to reduce network latency for customers accessing the system from different geographic locations, and it also helped limit the impact of regional technical problems on overall stability. During that period, we saw other global providers run into difficulties that disrupted network connectivity for entire countries and even continents. With that in mind, we continue to do our best to mitigate such risks today.

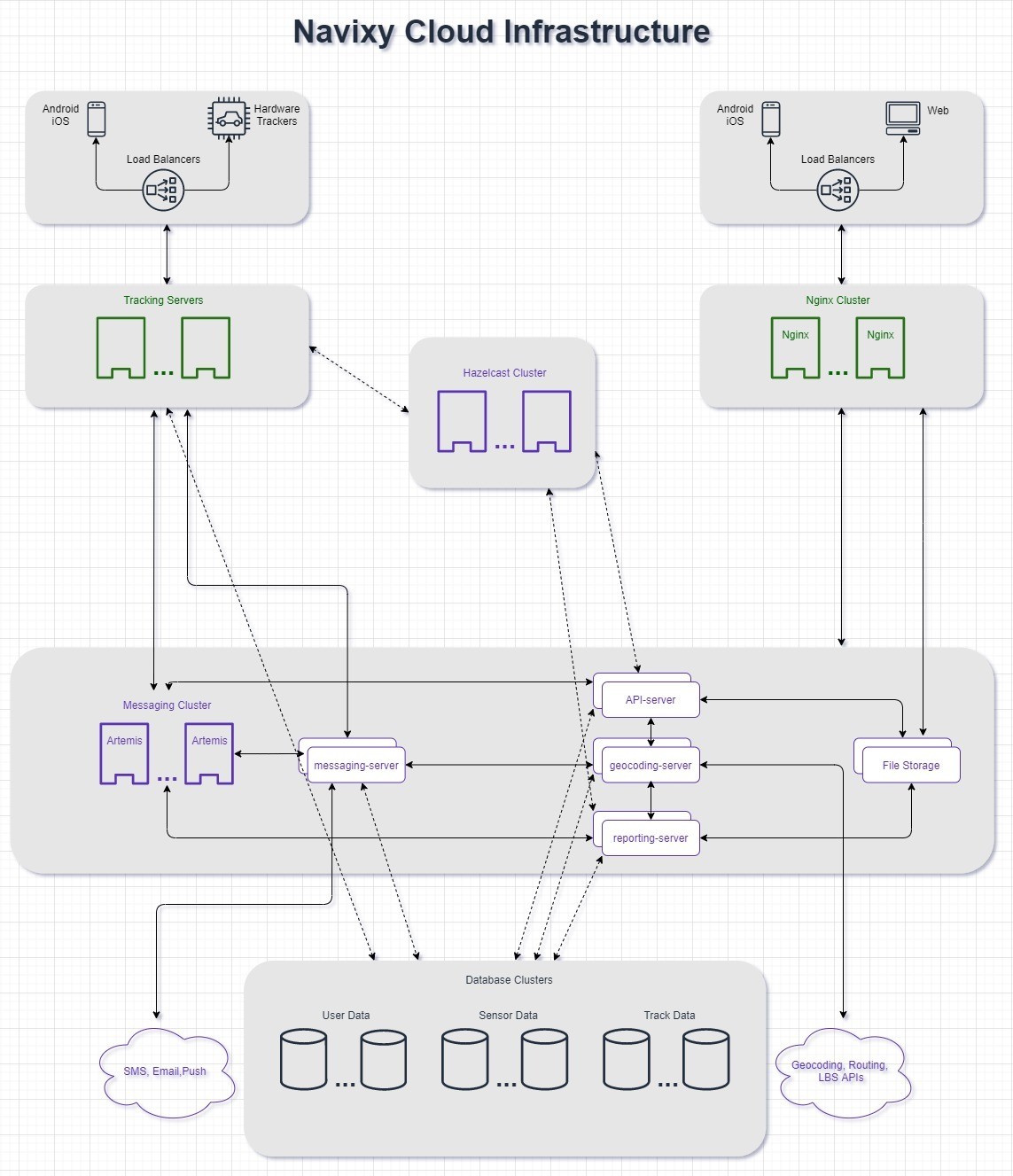

The diagram above illustrates how regional nodes are arranged within Navixy.

Apart from fault tolerance, we regularly run backups and replicate all key components needed for continuous service delivery. Since we use Amazon AWS infrastructure, the backup scheme involves launching a number of virtual servers, each performing a specific function, and distributing them across several data centers within a geographic point of presence (PoP). For example, our regional node in Frankfurt is spread over three independent data centers, and all databases are replicated continuously.
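
To illustrate why spreading servers over several data centers helps, here is a minimal Python sketch. It assumes a simple round-robin placement, which is our illustrative choice and not necessarily how servers are actually assigned in our AWS setup; the server and data center names are hypothetical.

```python
from collections import Counter


def spread_across_zones(servers: list[str], zones: list[str]) -> dict[str, str]:
    """Assign servers to data centers round-robin so each gets an equal share."""
    return {server: zones[i % len(zones)] for i, server in enumerate(servers)}


def survivors_after_zone_loss(placement: dict[str, str], failed_zone: str) -> list[str]:
    """List the servers that keep running if one whole data center goes down."""
    return [s for s, z in placement.items() if z != failed_zone]


# Hypothetical replicas of one function inside a regional node.
servers = ["api-1", "api-2", "api-3", "api-4", "api-5", "api-6"]
zones = ["dc-a", "dc-b", "dc-c"]  # three independent data centers

placement = spread_across_zones(servers, zones)
print(Counter(placement.values()))                   # two servers per data center
print(survivors_after_zone_loss(placement, "dc-b"))  # ['api-1', 'api-3', 'api-4', 'api-6']
```

With six replicas spread over three data centers, losing any single data center still leaves two thirds of the capacity running.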

We also back up all key servers daily, covering databases, virtual disks, or entire servers.
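
A daily schedule is easy to audit automatically. The Python sketch below flags any resource whose latest backup is more than 24 hours old. The inventory, timestamps, and function name are hypothetical; this illustrates the idea and is not our actual tooling.

```python
from datetime import datetime, timedelta, timezone

BACKUP_INTERVAL = timedelta(days=1)  # daily backup schedule


def overdue_backups(last_backup: dict[str, datetime], now: datetime) -> list[str]:
    """Return the resources whose most recent backup is older than one day."""
    return sorted(name for name, ts in last_backup.items() if now - ts > BACKUP_INTERVAL)


now = datetime(2020, 10, 1, 12, 0, tzinfo=timezone.utc)
last_backup = {  # hypothetical inventory: a database, a virtual disk, a whole server
    "db-main": now - timedelta(hours=3),
    "disk-app-1": now - timedelta(hours=30),
    "server-gateway": now - timedelta(hours=20),
}
print(overdue_backups(last_backup, now))  # ['disk-app-1']
```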

Importantly, each server performs a separate function, so if one goes down, the rest of the network continues to operate unaffected. In that case, we immediately deploy a replacement server from a prepared template and automatically install the current version of the relevant software components on it. Our CI/CD infrastructure lets us take the latest ready-to-go version of any component and deploy it to any server. The deployment procedure is triggered automatically through Ansible.
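
The replacement flow can be pictured with a simplified, in-memory Python sketch. Everything here is an assumption for illustration: `provision_from_template` and `deploy_latest` are hypothetical stand-ins for launching a VM from a template and for the Ansible-driven deployment, and the role names and version numbers are made up.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    role: str             # each server performs exactly one function
    version: str = ""     # version of the software component deployed on it
    healthy: bool = True


# Hypothetical stand-in for the CI/CD artifact store: latest ready-to-go build per role.
LATEST_BUILDS = {"gps-gateway": "2.14.0", "api": "3.2.1"}


def provision_from_template(role: str, name: str) -> Server:
    """Stand-in for launching a fresh VM for `role` from a prepared template."""
    return Server(name=name, role=role)


def deploy_latest(server: Server) -> None:
    """Stand-in for the automated deployment of the latest build for the server's role."""
    server.version = LATEST_BUILDS[server.role]


def heal(fleet: list[Server]) -> list[Server]:
    """Replace each unhealthy server with a freshly provisioned one; keep healthy ones as-is."""
    result = []
    for server in fleet:
        if server.healthy:
            result.append(server)
        else:
            replacement = provision_from_template(server.role, f"{server.name}-new")
            deploy_latest(replacement)
            result.append(replacement)
    return result


fleet = [
    Server("api-1", "api", "3.2.1"),
    Server("gw-1", "gps-gateway", "2.11.0", healthy=False),  # failed, running an older build
]
fleet = heal(fleet)
print([(s.name, s.version, s.healthy) for s in fleet])
# [('api-1', '3.2.1', True), ('gw-1-new', '2.14.0', True)]
```

Because each server has a single role, replacing a failed server never touches servers with other roles, which is what keeps a failure contained.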

Increasing global availability

In addition to high reliability, we are committed to giving our customers fast access to our services. Distributing the system across regional nodes lets us place our services closer to end users. Even so, some regions may still have limited access because of congested communication lines or long distances. To mitigate this, we use the CloudFlare content delivery network (CDN), which has proven to perform better than transferring data directly to the client over the Internet. Our tests have shown that this approach can reduce latency by 50% when loading client portals. In mid-September 2020, we completed the switch to CloudFlare CDN for all Navixy clients.

Because working with CloudFlare can involve some challenges, we can enable or disable the CloudFlare CDN on the fly, either for all users or for specific customer groups. Any switchover, whether to a backup server, a database, or any other service, is seamless and unnoticeable to our users.
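
Toggling the CDN per customer group follows a common feature-flag pattern: a global default plus per-group overrides. The Python sketch below shows that pattern only. It does not use CloudFlare's API, and the class, group names, and hostnames are hypothetical placeholders rather than real Navixy endpoints.

```python
from dataclasses import dataclass, field


@dataclass
class CdnSwitch:
    """Hypothetical flag: CDN on or off globally, with per-customer-group overrides."""
    enabled_globally: bool = True
    group_overrides: dict[str, bool] = field(default_factory=dict)

    def is_enabled(self, group: str) -> bool:
        # A group-specific setting wins; otherwise fall back to the global default.
        return self.group_overrides.get(group, self.enabled_globally)


def portal_origin(switch: CdnSwitch, group: str) -> str:
    """Pick which (placeholder) hostname a client in `group` loads the portal from."""
    return "cdn.example.com" if switch.is_enabled(group) else "origin.example.com"


switch = CdnSwitch()
switch.group_overrides["group-b"] = False  # e.g. a group affected by CDN issues
print(portal_origin(switch, "group-a"))    # cdn.example.com
print(portal_origin(switch, "group-b"))    # origin.example.com
switch.enabled_globally = False            # turn the CDN off for everyone without an override
print(portal_origin(switch, "group-a"))    # origin.example.com
```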

Stability and security

We keep a constant, close eye on our distributed infrastructure to ensure its stability. To get a holistic view of the current state of all infrastructure components and keep them running smoothly, we use the following monitoring channels:

- An in-house monitoring system.

- An external service checking the availability of public components.

- Built-in Amazon services that report problems with the Amazon infrastructure and with our running virtual servers.
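
One way to combine these channels into a single picture is to take, for every component, the worst status that any channel reports. The Python sketch below assumes a three-level status scale and hypothetical component names; it illustrates the merging idea only and is not our monitoring code.

```python
from enum import IntEnum


class Status(IntEnum):
    OK = 0
    DEGRADED = 1
    DOWN = 2


def overall_status(reports: dict[str, dict[str, Status]]) -> dict[str, Status]:
    """Merge per-channel reports, keeping the worst status seen for each component."""
    merged: dict[str, Status] = {}
    for channel_report in reports.values():
        for component, status in channel_report.items():
            merged[component] = max(merged.get(component, Status.OK), status)
    return merged


reports = {  # hypothetical snapshot from the three channels
    "in-house": {"api": Status.OK, "gps-gateway": Status.DEGRADED},
    "external": {"api": Status.OK, "web-portal": Status.OK},
    "aws": {"gps-gateway": Status.OK, "db": Status.DOWN},
}
for component, status in sorted(overall_status(reports).items()):
    print(component, status.name)
# api OK
# db DOWN
# gps-gateway DEGRADED
# web-portal OK
```

Taking the worst reported status is a deliberately pessimistic choice: a problem seen by only one channel, such as the external availability checker, still surfaces in the combined view.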

As for system security, we follow strict policies and procedures covering the security and confidentiality of customer data. Access to regional nodes is continuously monitored and restricted to authorized personnel, and access control lists (ACLs) permit connections to our cloud infrastructure only from authorized IP addresses.
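
An IP-based access control list boils down to a default-deny membership check. The sketch below uses Python's standard `ipaddress` module; the allowlisted ranges are documentation prefixes from RFC 5737 and RFC 3849, not real Navixy addresses. In production, such rules typically live in network-level controls such as firewall rules or cloud security groups rather than in application code.

```python
import ipaddress

# Hypothetical allowlist built from documentation-only address ranges.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.17/32"),
    ipaddress.ip_network("2001:db8:abcd::/48"),
]


def is_allowed(client_ip: str) -> bool:
    """Return True only if the client address falls inside an allowlisted network."""
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        return False  # malformed addresses are rejected outright
    # Containment checks between IPv4 and IPv6 objects simply return False.
    return any(addr in net for net in ALLOWED_NETWORKS)


for ip in ("203.0.113.42", "192.0.2.1", "2001:db8:abcd:1::5", "not-an-ip"):
    print(ip, is_allowed(ip))
# 203.0.113.42 True
# 192.0.2.1 False
# 2001:db8:abcd:1::5 True
# not-an-ip False
```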

Conclusion

Although it processes traffic from hundreds of thousands of GPS devices and handles millions of customer requests every day, the Navixy architecture remains simple to implement and highly scalable. Our network topology allows us to increase the performance of any node linearly and to easily add new nodes when needed.
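
Linear scaling turns capacity planning into simple arithmetic: if each server handles a roughly fixed load, the number of servers grows in proportion to traffic. The Python sketch below assumes a hypothetical per-server throughput and a 30% safety headroom; both are our illustrative assumptions, not Navixy figures.

```python
import math


def servers_needed(load_rps: float, per_server_rps: float, headroom: float = 0.3) -> int:
    """Servers required for a load under linear scaling, keeping spare capacity as headroom."""
    if per_server_rps <= 0:
        raise ValueError("per_server_rps must be positive")
    if not 0.0 <= headroom < 1.0:
        raise ValueError("headroom must be in [0, 1)")
    usable = per_server_rps * (1.0 - headroom)  # capacity we are willing to use per server
    return max(1, math.ceil(load_rps / usable))


# Hypothetical numbers: each server comfortably handles 200 requests per second.
print(servers_needed(1000, 200))  # 8
print(servers_needed(2000, 200))  # 15
```

Doubling the load roughly doubles the number of servers, with rounding up to whole servers accounting for the small difference.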

Our top priority is to provide our customers with fault-tolerant, reliable, affordable, stable, and secure services. We will keep working hard to deliver technical solutions that meet established standards.

We hope you found this post helpful and learned something new. If you have any further questions on this topic, feel free to contact us at sales@navixy.com. As always, you are welcome to share your thoughts on the service architecture in the comments section below.